I find toy examples helpful in exploratory work.

So here is a toy example showing the pitfalls of forward selection of regression variables, in the presence of correlation between predictors. In other words, this is an example of the specification problem.

Suppose the true specification, or regression, is:

y = 20x1 - 11x2 + 10x3

and the observations on x2 and x3 in the available data are correlated.

To produce examples of this system, I create columns of random numbers in which the second and third columns are correlated, with a correlation coefficient of around 0.6. I also generate a random error term with zero mean and a constant variance of 10. Then, after generating the data and the error terms, I apply the coefficients indicated above and add the error to compute values for the dependent variable y.
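The data-generating step described above can be sketched in Python. This is only a minimal sketch, not the original Excel workbook: the function name `make_data`, the standard normal draws for the predictors, and the seed are all assumptions, since the post does not say how the random columns were scaled.

```python
import numpy as np

def make_data(n=40, rho=0.6, noise_var=10.0, seed=0):
    """Simulate one sample from y = 20*x1 - 11*x2 + 10*x3 + error.

    Assumption: predictors are standard normal; the original
    spreadsheet may have used a different distribution or scale.
    """
    rng = np.random.default_rng(seed)
    x1 = rng.standard_normal(n)
    x2 = rng.standard_normal(n)
    # Mix x2 with fresh noise so the population correlation of x2 and x3 is rho.
    x3 = rho * x2 + np.sqrt(1 - rho**2) * rng.standard_normal(n)
    # Error term with zero mean and variance noise_var.
    eps = rng.normal(0.0, np.sqrt(noise_var), n)
    y = 20 * x1 - 11 * x2 + 10 * x3 + eps
    X = np.column_stack([x1, x2, x3])
    return X, y

X, y = make_data()
# Sample correlation of x2 and x3; with only 40 rows it varies around 0.6.
print(np.corrcoef(X[:, 1], X[:, 2])[0, 1])
```

With only 40 rows, the sample correlation can land noticeably above or below 0.6 on any given draw, which is consistent with the "around 0.6" wording above.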

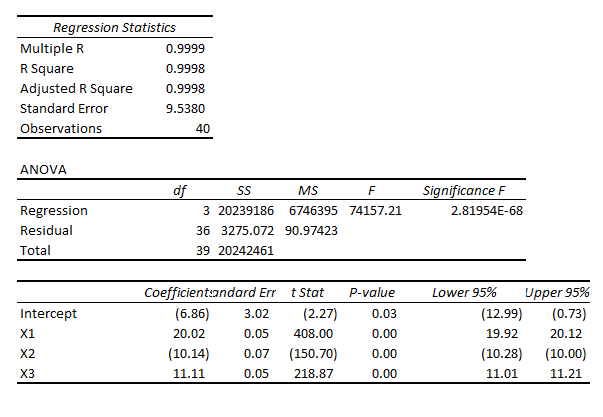

Then, specifying all three variables, x1, x2, and x3, I estimate regressions which characteristically have coefficient values not far from the (20,-11, 10), such as,

This, of course, is a regression output from Microsoft Excel, where I developed this simple Monte Carlo simulation which has 40 “observations.”


If you were lucky enough to estimate this regression initially, you might well stop and not bother dropping variables to estimate other, potentially competing models.
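For readers who want to try the full three-variable fit outside Excel, here is a rough Python sketch. The function name `fit_full_model`, the standard normal predictors, and the inclusion of an intercept are assumptions on my part. The numbers it prints will not match the Excel output, only behave similarly.

```python
import numpy as np

def fit_full_model(n=40, rho=0.6, noise_var=10.0, seed=0):
    """Simulate one sample and fit y on an intercept, x1, x2 and x3 by OLS."""
    rng = np.random.default_rng(seed)
    x1 = rng.standard_normal(n)
    x2 = rng.standard_normal(n)
    # x3 is built from x2 plus noise so the two are correlated (about rho).
    x3 = rho * x2 + np.sqrt(1 - rho**2) * rng.standard_normal(n)
    y = 20 * x1 - 11 * x2 + 10 * x3 + rng.normal(0.0, np.sqrt(noise_var), n)

    # Design matrix with a leading column of ones for the intercept.
    X = np.column_stack([np.ones(n), x1, x2, x3])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta  # order: [intercept, b1, b2, b3]

print(fit_full_model())
```

When all three predictors are included, the slope estimates should scatter around 20, -11, and 10 from sample to sample, with the spread shrinking as `n` grows.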

However, if you start with fewer variables, you encounter a significant difficulty.

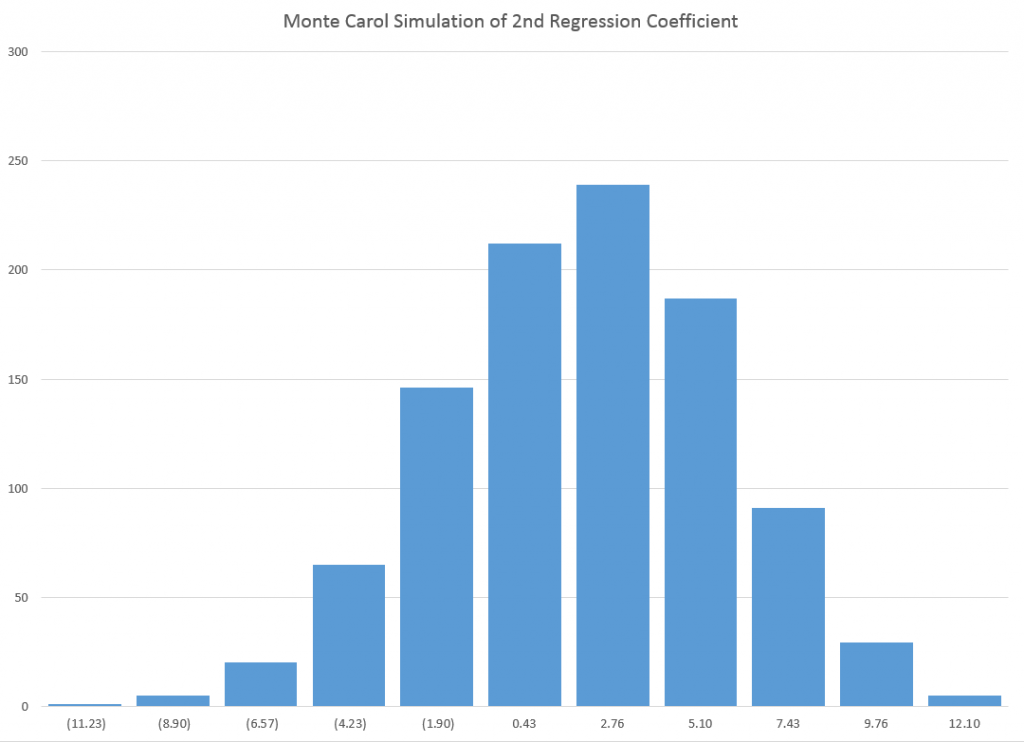

Here is the distribution of x2 in repeated estimates of a regression with explanatory variables x1 and x2 –

As you can see, the various estimates of this coefficient, whose true value is -11, are wide of the mark. With x3 left out, x2 absorbs part of the positive effect of its correlated partner, which pulls the estimate toward zero. In fact, none of the 1,000 estimates in this simulation proved to be statistically significant at standard levels.
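The repeated-estimation experiment can be approximated with a short Monte Carlo loop in Python. This is a sketch under my own assumptions (standard normal predictors, an intercept in the regression, classical OLS standard errors, and the hypothetical function name `short_regression_draws`). Because the share of significant estimates depends on the scale of the predictors, which is not stated here, it need not reproduce the significance result reported above. What it does show is the x2 estimates centering well away from -11.

```python
import numpy as np

def short_regression_draws(reps=1000, n=40, rho=0.6, noise_var=10.0, seed=0):
    """Repeatedly fit y on x1 and x2 only (x3 omitted).

    Returns the x2 coefficient and its t-statistic for each replication.
    """
    rng = np.random.default_rng(seed)
    coefs = np.empty(reps)
    tstats = np.empty(reps)
    for r in range(reps):
        x1 = rng.standard_normal(n)
        x2 = rng.standard_normal(n)
        x3 = rho * x2 + np.sqrt(1 - rho**2) * rng.standard_normal(n)
        y = 20 * x1 - 11 * x2 + 10 * x3 + rng.normal(0.0, np.sqrt(noise_var), n)

        # Misspecified design: intercept, x1, x2. The true model also has x3.
        X = np.column_stack([np.ones(n), x1, x2])
        beta, *_ = np.linalg.lstsq(X, y, rcond=None)

        # Classical OLS standard error for the x2 coefficient.
        resid = y - X @ beta
        sigma2 = resid @ resid / (n - X.shape[1])
        cov = sigma2 * np.linalg.inv(X.T @ X)
        coefs[r] = beta[2]
        tstats[r] = beta[2] / np.sqrt(cov[2, 2])
    return coefs, tstats

coefs, tstats = short_regression_draws()
print("mean x2 coefficient:", coefs.mean())
print("share with |t| > 2:", np.mean(np.abs(tstats) > 2.0))
```

The `|t| > 2` cutoff is only a rough stand-in for a 5% test. A histogram of `coefs` gives the analogue of the chart above.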

Using some flavors of forward selection, therefore, you might well decide to drop x2 from the specification and try including x3 instead.

But you would have the same type of problem in that case, too, since x2 and x3 are correlated.
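The symmetry can be made concrete with the textbook omitted-variable-bias approximation. The notation here is mine: β2 and β3 are the true coefficients, ρ is the correlation between x2 and x3, and hats mark estimates. I also assume that x2 and x3 have the same variance and that x1 is uncorrelated with both. The figures below follow from those assumptions, not from the Excel runs.

```latex
\begin{aligned}
E[\hat{b}_2 \mid x_3 \text{ omitted}] &\approx \beta_2 + \rho\,\beta_3 = -11 + 0.6 \times 10 = -5, \\
E[\hat{b}_3 \mid x_2 \text{ omitted}] &\approx \beta_3 + \rho\,\beta_2 = 10 + 0.6 \times (-11) = 3.4.
\end{aligned}
```

Either way, the included variable's estimate is dragged toward zero by the opposite-signed effect of the one left out.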

I sometimes hear people appealing to stability arguments in the face of the specification problem. In other words, they strive to find a stable set of core predictors, believing that if they can do this, they will have controlled as effectively as they can for this problem of omitted variables which are correlated with other variables that are included in the specification.