As Hal Varian writes in his popular Big Data: New Tricks for Econometrics the wealth of data now available to researchers demands new techniques of analysis.

In particular, often there is the problem of “many predictors.” In classic regression, the number of observations is assumed to exceed the number of explanatory variables. This obviously is challenged in the Big Data context.

Variable selection procedures are one tactic in this situation.

Readers may want to consult the post Selecting Predictors. It has my list of methods, as follows:

- Forward Selection. Begin with no candidate variables in the model. Select the variable that boosts some goodness-of-fit or predictive metric the most. Traditionally, this has been R-Squared for an in-sample fit. At each step, select the candidate variable that increases the metric the most. Stop adding variables when none of the remaining variables are significant. Note that once a variable enters the model, it cannot be deleted.

- Backward Selection. This starts with the superset of potential predictors and eliminates variables which have the lowest score by some metric – traditionally, the t-statistic.

- Stepwise regression. This combines backward and forward selection of regressors.

- Regularization and Selection by means of the LASSO. Here is the classic article and here is a post in this blog on the LASSO.

- Information criteria applied to all possible regressions – pick the best specification by applying the Aikaike Information Criterion (AIC) or Bayesian Information Criterion (BIC) to all possible combinations of regressors. Clearly, this is only possible with a limited number of potential predictors.

- Cross-validation or other out-of-sample criteria applied to all possible regressions– Typically, the error metrics on the out-of-sample data cuts are averaged, and the lowest average error model is selected out of all possible combinations of predictors.

- Dimension reduction or data shrinkage with principal components. This is a many predictors formulation, whereby it is possible to reduce a large number of predictors to a few principal components which explain most of the variation in the data matrix.

- Dimension reduction or data shrinkage with partial least squares. This is similar to the PC approach, but employs a reduction to information from both the set of potential predictors and the dependent or target variable.

Some more supporting posts are found here, usually with spreadsheet-based “toy” examples:

Three Pass Regression Filter, Partial Least Squares and Principal Components, Complete Subset Regressions, Variable Selection Procedures – the Lasso, Kernel Ridge Regression – A Toy Example, Dimension Reduction With Principal Components, bootstrapping, exponential smoothing, Estimation and Variable Selection with Ridge Regression and the LASSO

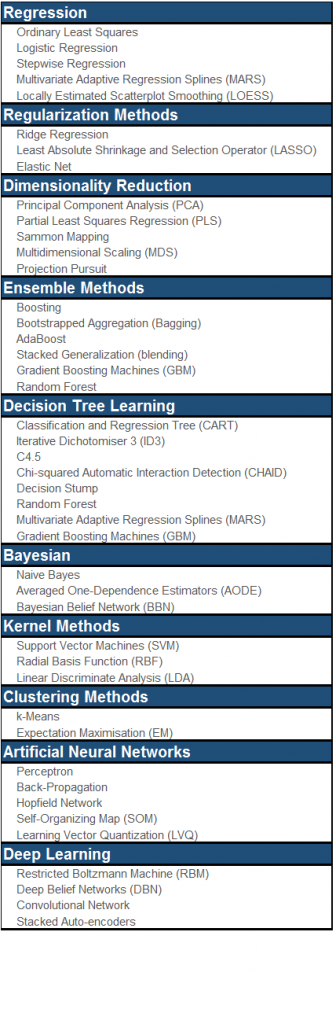

Plus one of the nicest infographics on machine learning – a related subject – is developed by the Australian blog Machine Learning Mastery.