Here are some notes on essential features of exponential smoothing.

- Name. Exponential smoothing (ES) algorithms create exponentially weighted sums of past values to produce the next (and subsequent period) forecasts. So, in simple exponential smoothing, the recursion formula is Lt=αXt+(1-α)Lt-1 where α is the smoothing constant constrained to be within the interval [0,1], Xt is the value of the time series to be forecast in period t, and Lt is the (unobserved) level of the series at period t. Substituting the similar expression for Lt-1 we get Lt=αXt+(1-α) (αXt-1+(1-α)Lt-2)= αXt+α(1-α)Xt-1+(1-α)2Lt-2, and so forth back to L1. This means that more recent values of the time series X are weighted more heavily than values at more distant times in the past. Incidentally, the initial level L1 is not strongly determined, but is established by one ad hoc means or another – often by keying off of the initial values of the X series in some manner or another. In state space formulations, the initial values of the level, trend, and seasonal effects can be included in the list of parameters to be established by maximum likelihood estimation.

- Types of Exponential Smoothing Models. ES pivots on a decomposition of time series into level, trend, and seasonal effects. Altogether, there are fifteen ES methods. Each model incorporates a level with the differences coming as to whether the trend and seasonal components or effects exist and whether they are additive or multiplicative; also whether they are damped. In addition to simple exponential smoothing, Holt or two parameter exponential smoothing is another commonly applied model. There are two recursion equations, one for the level Lt and another for the trend Tt, as in the additive formulation, Lt=αXt+(1-α)(Lt-1+Tt-1) and Tt=β(Lt– Lt-1)+(1-β)Tt-1 . Here, there are now two smoothing parameters, α and β, each constrained to be in the closed interval [0,1]. Winters or three parameter exponential smoothing, which incorporates seasonal effects, is another popular ES model.

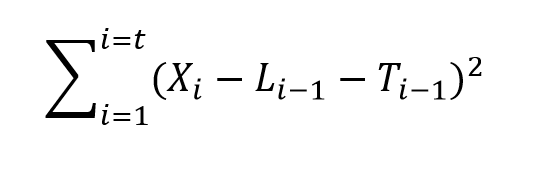

- Estimation of the Smoothing Parameters. The original method of estimating the smoothing parameters was to guess their values, following guidelines like “if the smoothing parameter is near 1, past values will be discounted further” and so forth. Thus, if the time series to be forecast was very erratic or variable, a value of the smoothing parameter which was closer to zero might be selected, to achieve a longer period average. The next step is to set up a sum of the squared differences of the within sample predictions and minimize these. Note that the predicted value of Xt+1 in the Holt or two parameter additive case is Lt+Tt, so this involves minimizing the expression

Currently, the most advanced method of estimating the value of the smoothing parameters is to express the model equations in state space form and utilize maximum likelihood estimation. It’s interesting, in this regard, that the error correction version of ES recursion equations are a bridge to this approach, since the error correction formulation is found at the very beginnings of the technique. Advantages of using the state space formulation and maximum likelihood estimation include (a) the ability to estimate confidence intervals for point forecasts, and (b) the capability of extending ES methods to nonlinear models.

Currently, the most advanced method of estimating the value of the smoothing parameters is to express the model equations in state space form and utilize maximum likelihood estimation. It’s interesting, in this regard, that the error correction version of ES recursion equations are a bridge to this approach, since the error correction formulation is found at the very beginnings of the technique. Advantages of using the state space formulation and maximum likelihood estimation include (a) the ability to estimate confidence intervals for point forecasts, and (b) the capability of extending ES methods to nonlinear models. - Comparison with Box-Jenkins or ARIMA models. ES began as a purely applied method developed for the US Navy, and for a long time was considered an ad hoc procedure. It produced forecasts, but no confidence intervals. In fact, statistical considerations did not enter into the estimation of the smoothing parameters at all, it seemed. That perspective has now changed, and the question is not whether ES has statistical foundations – state space models seem to have solved that. Instead, the tricky issue is to delineate the overlap and differences between ES and ARIMA models. For example, Gardner makes the statement that all linear exponential smoothing methods have equivalent ARIMA models. Hyndman points out that the state space formulation of ES models opens the way for expressing nonlinear time series – a step that goes beyond what is possible in ARIMA modeling.

- The Importance of Random Walks. The random walk is a forecasting benchmark. In an early paper, Muth showed that a simple exponential smoothing model provided optimal forecasts for a random walk. The optimal forecast for a simple random walk is the current period value. Things get more complicated when there is an error associated with the latent variable (the level). In that case, the smoothing parameter determines how much of the recent past is allowed to affect the forecast for the next period value.

- Random Walks With Drift. A random walk with drift, for which a two parameter ES model can be optimal, is an important form insofar as many business and economic time series appear to be random walks with drift. Thus, first differencing removes the trend, leaving ideally white noise. A huge amount of ink has been spilled in econometric investigations of “unit roots” – essentially exploring whether random walks and random walks with drift are pretty much the whole story when it comes to major economic and business time series.

- Advantages of ES. ES is relatively robust, compared with ARIMA models, which are sensitive to mis-specification. Another advantage of ES is that ES forecasts can be up and running with only a few historic observations. This comment applied to estimation of the level and possibly trend, but does not apply in the same degree to the seasonal effects, which usually require more data to establish. There are a number of references which establish the competitive advantage in terms of the accuracy of ES forecasts in a variety of contexts.

- Advanced Applications.The most advanced application of ES I have seen is the research paper by Hyndman et al relating to bagging exponential smoothing forecasts.

The bottom line is that anybody interested in and representing competency in business forecasting should spend some time studying the various types of exponential smoothing and the various means to arrive at estimates of their parameters.

For some reason, exponential smoothing reaches deep into actual process in data generation and consistently produces valuable insights into outcomes.