Miles Kimball is a professor at the University of Michigan and a vocal, prolific proponent of negative interest rates. His blog, Confessions of a Supply-Side Liberal, is peppered with posts on their benefits.

Kimball’s March 2 post, “Even Central Bankers Need Lessons on the Transmission Mechanism for Negative Interest Rates,” after some words of adoration, takes the Governor of the Bank of England, Mark Carney, to task. Carney’s problem? Carney wrote recently that unless regular households face negative interest rates in their deposit accounts, negative interest rates work only through the exchange rate channel, which is zero-sum from a global point of view.

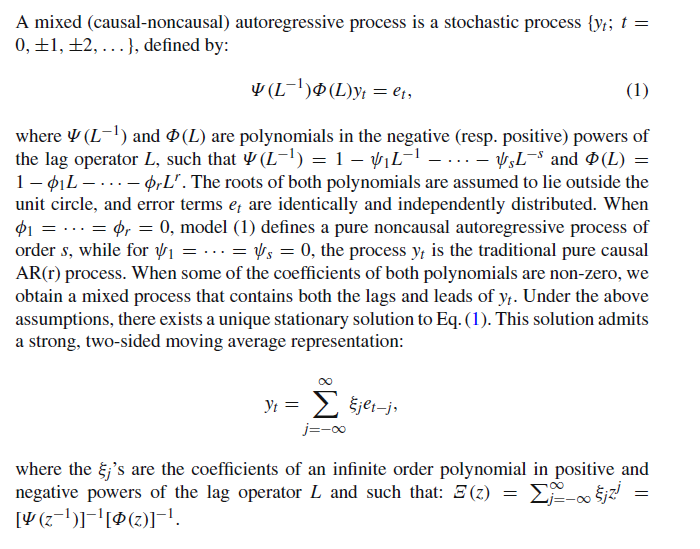

Kimball’s argument is a little esoteric, but it advances three ideas.

First, the negative interest rates central banks charge member banks on reserves should be passed on to commercial and consumer customers with larger accounts, perhaps with an exemption for smaller checking and savings accounts holding, say, less than $1000.

Second, moving all transactions toward electronic money makes negative interest rates easier and more effective to administer. In that regard, it may be necessary to tax transactions conducted in paper money if a negative interest rate regime is in force.

Third, the impact on bank profits can be mitigated by subsidizing banks if the central bank moves into negative interest rate territory.
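The first two ideas can be sketched with some simple arithmetic. The code below is a hypothetical illustration, not Kimball’s specification: the function names, the $1000 threshold, the simple monthly-interest convention, and the treatment of the cost of holding paper money (storage, insurance, and any tax on paper transactions) as a single annual percentage are all assumptions. `monthly_deposit_interest` shows two ways of exempting small accounts; `prefers_paper` shows why paper money matters at all: if deposits pay a negative rate while currency pays zero, large holders can simply withdraw cash unless holding it costs at least as much.

```python
def monthly_deposit_interest(balance: float, annual_rate: float,
                             threshold: float = 1000.0,
                             tiered: bool = True) -> float:
    """Return one month's interest on a deposit (negative means a charge).

    Hypothetical pass-through rule, assumed for illustration:
      tiered=True  -> the first `threshold` dollars of every account are exempt,
                      and only the excess bears the policy rate;
      tiered=False -> accounts below `threshold` are exempt entirely, and
                      larger accounts pay the rate on their full balance.
    Uses simple (non-compounding) monthly interest.
    """
    if tiered:
        charged_base = max(balance - threshold, 0.0)
    else:
        charged_base = balance if balance >= threshold else 0.0
    if charged_base == 0.0:
        return 0.0  # exempt: avoid returning a negative zero
    return charged_base * annual_rate / 12


def prefers_paper(deposit_rate: float, paper_cost_rate: float) -> bool:
    """Return True if a saver ends the year with more by holding paper money.

    deposit_rate:    annual rate paid on bank deposits (negative under NIRP).
    paper_cost_rate: assumed all-in annual cost of holding and using paper
                     money (storage, insurance, any tax on paper transactions),
                     as a fraction of the amount held.
    """
    value_in_bank = 1.0 + deposit_rate
    value_in_paper = 1.0 - paper_cost_rate
    return value_in_paper > value_in_bank


def lowest_rate_without_flight(paper_cost_rate: float) -> float:
    """Most negative deposit rate at which paper money is not yet strictly better."""
    return -paper_cost_rate


if __name__ == "__main__":
    rate = -0.02  # assumed -2% annual rate passed through from the central bank
    for balance in (800.0, 1500.0, 50_000.0):
        tiered = monthly_deposit_interest(balance, rate)
        cliff = monthly_deposit_interest(balance, rate, tiered=False)
        print(f"balance {balance:>9,.0f}: tiered {tiered:8.2f}  all-or-nothing {cliff:8.2f}")

    # A small (assumed) 0.5% cost of holding cash makes paper beat a -2% deposit;
    # a 3% charge on paper money removes the incentive.
    print(prefers_paper(rate, 0.005), prefers_paper(rate, 0.03))
    print(lowest_rate_without_flight(0.03))
```

Under the tiered rule, a $1,500 account pays the negative rate only on $500, while the all-or-nothing rule charges it on the full balance, creating a cliff at the threshold. The paper-money check is the zero lower bound in miniature: the floor on deposit rates is set by the cost of holding cash, which is why a charge on paper money lowers that floor.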

Fundamentally, Kimball’s view is that monetary policy, and full-scale negative interest rate policy in particular, is the primary answer to the problem of insufficient aggregate demand. There is no need to set inflation targets above zero to get the economy moving; just implement sufficiently negative interest rates, and things will rebound quickly.

Kimball’s vulnerability is great mathematical skill coupled with a casual attitude toward the details of actual economic institutions and arrangements.

For example, in his Carney post, Kimball offers this rather tortured prose under the heading “Why Wealth Effects Would Be Zero With a Representative Household”:

It is worth clarifying why the wealth effects from interest rate changes would have to be zero if everyone were identical [sic, emphasis mine]. In aggregate, the material balance condition ensures that flow of payments from human and physical capital have not only the same present value but the same time path and stochastic pattern as consumption. Thus–apart from any expansion of the production of the economy as a whole as a result of the change in monetary policy–any effect of interest rate changes on the present value of society’s assets overall is cancelled out by the effect of interest rate changes on the present value of the planned path and pattern of consumption. Of course, what is actually done will be affected by the change in interest rates, but the envelope theorem says that the wealth effects can be calculated based on flow of payments and consumption flows that were planned initially.
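The accounting behind this passage can be checked numerically. The sketch below uses hypothetical names and made-up flows for two households, a saver and a borrower; it discounts each planned income stream and consumption stream at a constant annual rate and holds the plans fixed, as the envelope-theorem argument allows. When income and consumption coincide year by year, as the material balance condition requires in aggregate, net wealth is zero at every interest rate. The individual households, however, gain and lose equal amounts when the rate changes.

```python
def present_value(flows: list[float], rate: float) -> float:
    """PV of flows[t] received at the end of year t + 1, at a constant annual rate."""
    return sum(f / (1.0 + rate) ** (t + 1) for t, f in enumerate(flows))


def net_wealth(income: list[float], consumption: list[float], rate: float) -> float:
    """PV of planned income minus PV of planned consumption (plans held fixed)."""
    return present_value(income, rate) - present_value(consumption, rate)


R0 = 0.02                        # assumed rate at which both plans were made
LATER = 100.0 * (1.0 + R0) ** 2  # 100 lent in year 1, repaid with interest in year 3

# Hypothetical households: the saver lends 100 to the borrower.
saver_income, saver_consumption = [100.0, 0.0, 0.0], [0.0, 0.0, LATER]
borrower_income, borrower_consumption = [0.0, 0.0, LATER], [100.0, 0.0, 0.0]

# Aggregate flows: income equals consumption in every year (material balance).
agg_income = [s + b for s, b in zip(saver_income, borrower_income)]
agg_consumption = [s + b for s, b in zip(saver_consumption, borrower_consumption)]

if __name__ == "__main__":
    for r in (R0, -0.02):
        saver = net_wealth(saver_income, saver_consumption, r)
        borrower = net_wealth(borrower_income, borrower_consumption, r)
        aggregate = net_wealth(agg_income, agg_consumption, r)
        print(f"r={r:+.2f}  saver={saver:+.2f}  borrower={borrower:+.2f}  "
              f"aggregate={aggregate:+.2f}")
```

At r = -0.02 the saver’s net wealth falls by about 8.5 and the borrower’s rises by the same amount, while the aggregate stays at zero. The representative-household result is silent about who the savers are.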

Kimball offers this reassurance in case you worried that a regime of -2 percent interest rates, which he endorses to bring a speedy end to economic stagnation, could collapse the life insurance industry or wipe out pension funds.
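A rough sense of the stakes for pension funds and life insurers comes from discounting a single long-dated promise. This sketch assumes a flat discount rate, annual compounding, and one fixed nominal payment due in 30 years, with a hypothetical amount; it looks only at the liability side, so whether a real fund is hurt also depends on how its assets reprice, which is ignored here.

```python
def pv_of_payment(amount: float, years: int, rate: float) -> float:
    """Present value of a single payment due in `years`, at a flat annual rate."""
    return amount / (1.0 + rate) ** years


if __name__ == "__main__":
    promise = 1_000_000.0  # hypothetical pension payment due in 30 years
    pv_plus = pv_of_payment(promise, 30, 0.02)
    pv_minus = pv_of_payment(promise, 30, -0.02)
    print(f"PV at +2%: {pv_plus:,.0f}")
    print(f"PV at -2%: {pv_minus:,.0f}")
    print(f"ratio: {pv_minus / pv_plus:.2f}")
```

Moving the discount rate from +2 percent to -2 percent raises the present value of the same promise by a factor of roughly 3.3, an amount the fund must cover with assets.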

Kimball’s paragraph is troubling from another standpoint as well, since he believes negative interest rates, or “monetary policy,” can trigger “expansion of the production of the economy as a whole,” precisely the effect the passage sets aside. So what about those wealth effects?

Indeed, later in the Carney post he writes:

...for any central bank willing to go off the paper standard, there is no limit to how low interest rates can go other than the danger of overheating the economy with too strong an economic recovery. If starting from current conditions, any country can maintain interest rates at -7% or lower for two years without overheating its economy, then I am wrong about the power of negative interest rates. But in fact, I think it will not take that much. -2% would do a great deal of good for the eurozone or Japan, and -4% for a year and a half would probably be enough to do the trick of providing more than enough aggregate demand.

At the end of the Carney post, Kimball links to a list of his own and others’ writings on negative interest rates called “How and Why to Eliminate the Zero Lower Bound: A Reader’s Guide.” It is worth bookmarking.


Although it is not completely fair, I have to say all this reminds me of a widely quoted passage from Keynes’s General Theory:

“Practical men, who believe themselves to be quite exempt from any intellectual influences, are usually the slaves of some defunct economist. Madmen in authority, who hear voices in the air, are distilling their frenzy from some academic scribbler of a few years back.”

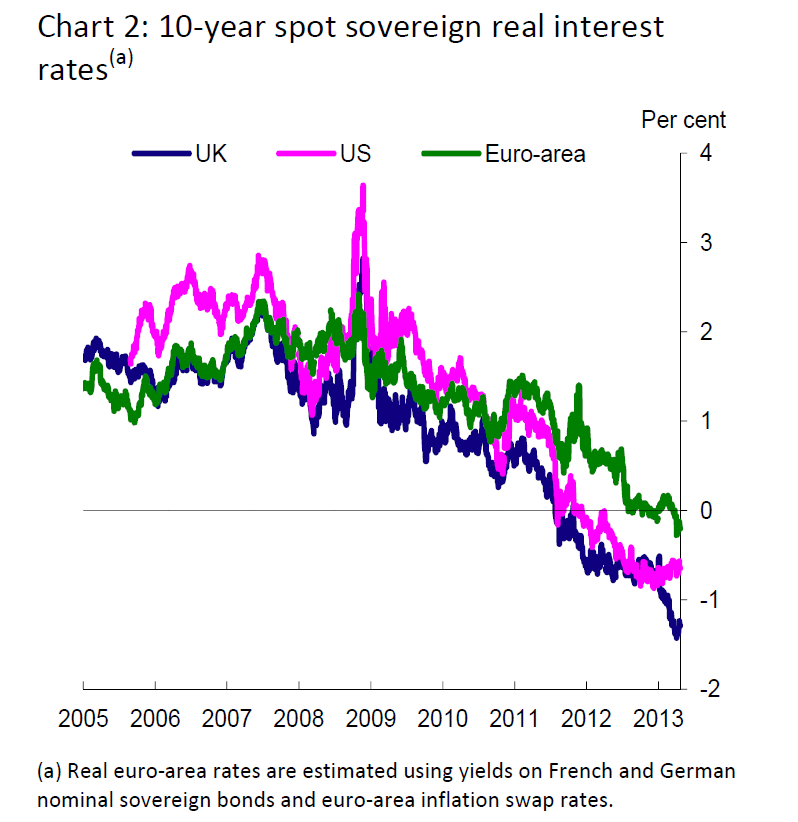

Of course, the policy issue behind the spreading adoption of negative interest rates is that central banks in many countries are already at the zero bound. Thus, unless central banks can move into negative interest rate territory, governments are more or less “out of ammunition” for combating the next recession, at least if the political alignments currently favoring austerity over infrastructure investment and the like remain in control.

The problem I have might be posed as one of “complexity theory.”

I myself have spent hours poring over optimal control models of consumption and dynamic general equilibrium. This material is so rarefied and intellectually challenging that it fosters a mindset in which mastering Pontryagin’s maximum principle in a multi-equation setup seems to mean you have something relevant to say about real economic affairs. That may be doubtful, particularly when the linkages between organizations are so complex, not least in their dynamics.
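For readers who have not met these models, the flavor of the results is captured by the textbook Keynes-Ramsey rule, which Pontryagin’s maximum principle delivers for a household with constant-relative-risk-aversion utility, a constant interest rate r, and a rate of time preference ρ: consumption grows at (r - ρ)/σ, where σ is the inverse of the elasticity of intertemporal substitution. The sketch below simply evaluates that rule. The parameter values are illustrative assumptions, initial consumption is taken as given (in the full model the budget constraint pins it down), and the model abstracts from uncertainty and from the institutional detail at issue here.

```python
import math


def consumption_growth_rate(r: float, rho: float, sigma: float) -> float:
    """Keynes-Ramsey rule: optimal growth rate of consumption, (r - rho) / sigma.

    r:     real interest rate (assumed constant)
    rho:   rate of time preference
    sigma: coefficient of relative risk aversion (inverse of the elasticity
           of intertemporal substitution)
    """
    return (r - rho) / sigma


def consumption_path(c0: float, r: float, rho: float, sigma: float,
                     years: int) -> list[float]:
    """Consumption at t = 0, 1, ..., years along the continuous-time optimal path."""
    g = consumption_growth_rate(r, rho, sigma)
    return [c0 * math.exp(g * t) for t in range(years + 1)]


if __name__ == "__main__":
    # Illustrative parameters: rho = 2%, sigma = 2, initial consumption 100.
    for r in (0.02, -0.02):
        path = consumption_path(100.0, r, 0.02, 2.0, 5)
        print(f"r={r:+.2f}: " + ", ".join(f"{c:.1f}" for c in path))
```

With r equal to ρ the planned path is flat; a negative r tilts it downward, pulling planned spending toward the present.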

The problem may indeed be institutional, but from a different angle. University economics departments have business school students as their main consumers, so economists have to offer something different.

One would hope that machine learning, Big Data, and the new predictive analytics, framed along the lines outlined by Hal Varian and others, could provide an alternative paradigm for economists, possibly rescuing them from relying on adjusting a single number in equations stripped of the real, concrete details of economic linkages.