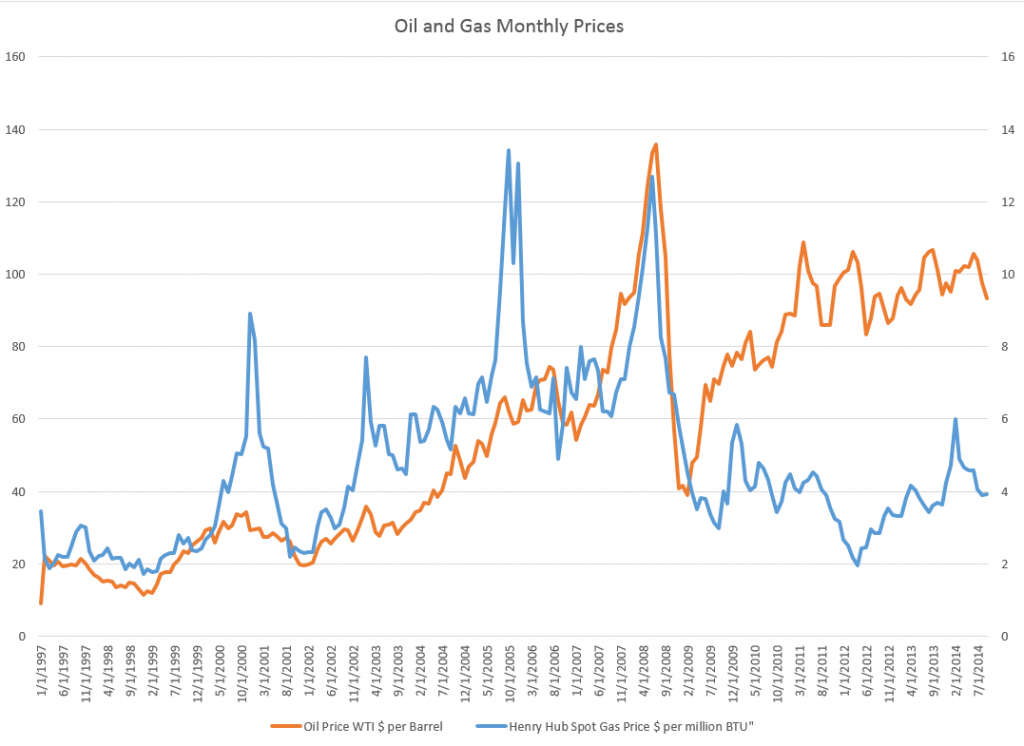

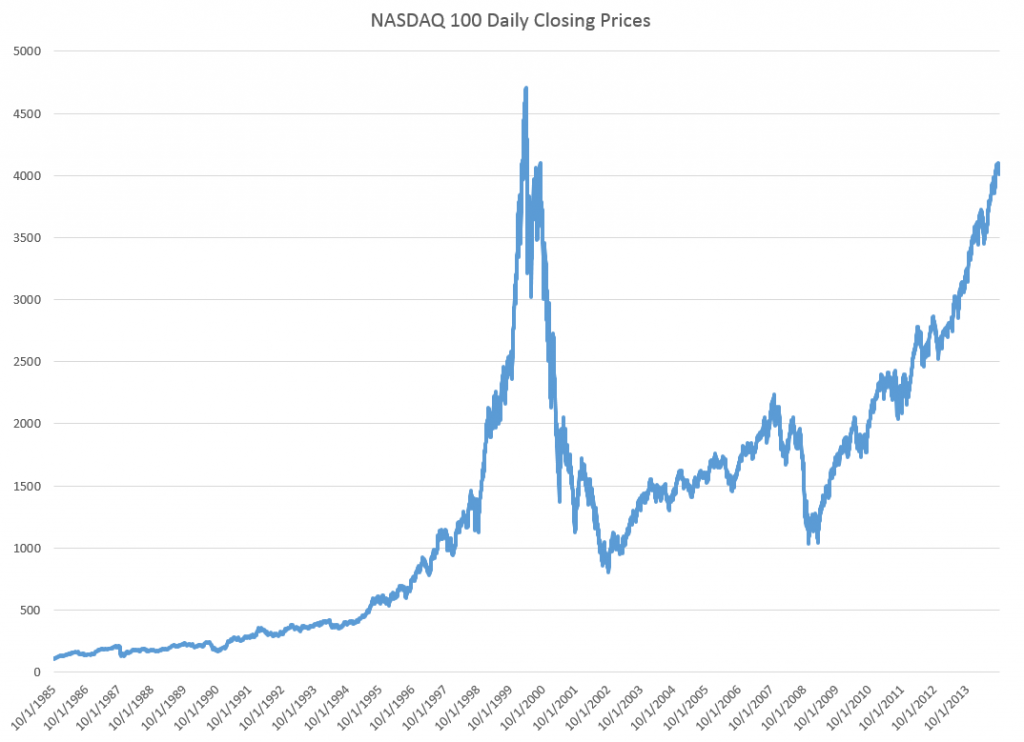

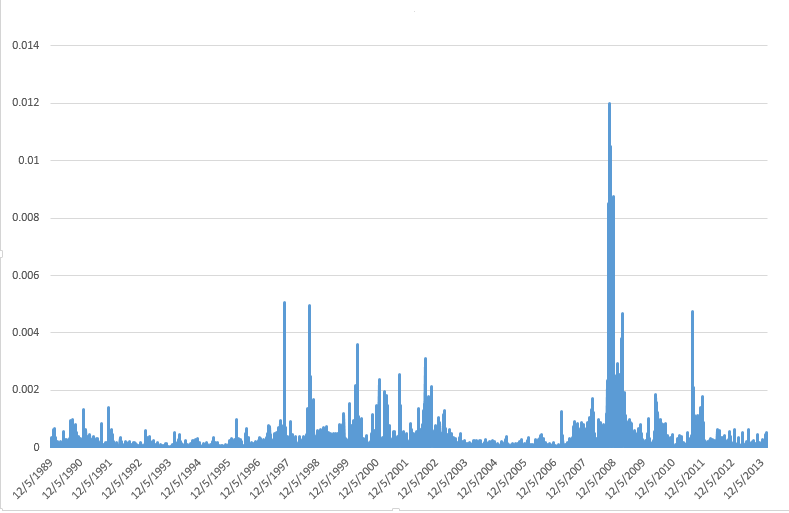

One of the more interesting questions in applied forecasting is the relationship between oil and natural gas prices in the US market, shown below.

Up to the early 1990’s, the interplay between oil and gas prices followed “rules of thumb” – for example, gas prices per million Btu were approximately one tenth oil prices.

There is still some suggestion of this – for example, peak oil prices recently hit nearly $140 a barrel at the same time that gas prices were nearly $14 per million Btu.

In general, however, these ratio relationships appear to break down during the first decade of the century, by around 2009 if not earlier.

A Longer Term Relationship?

Perhaps oil and gas prices are in a longer term relationship, but one that is often disturbed in the short run.

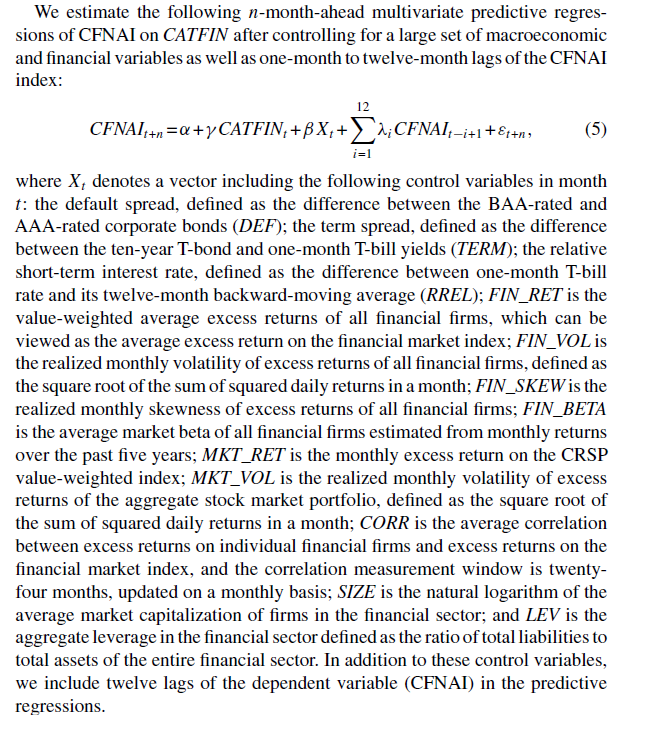

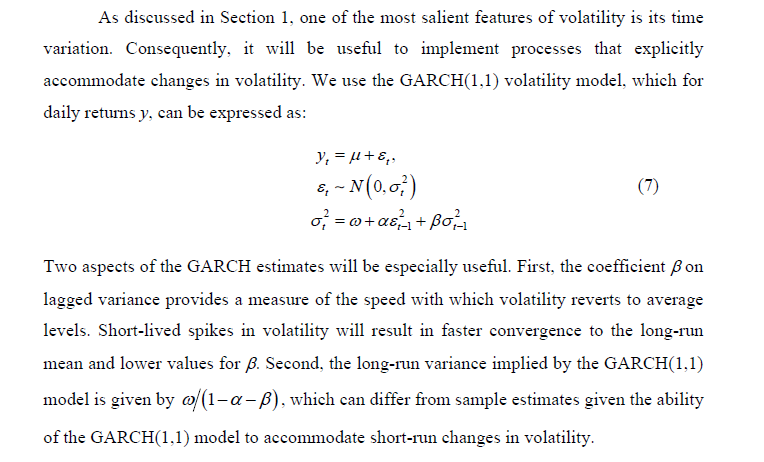

One way economists and econometricians think of this is in terms of “co-integrating relationships.” That’s a fancy way of saying that regressions of the form,

Gas price in time t = constant + α(oil price in time t) + (residual in time t)

are predictive. Here, α is a coefficient to be estimated.

Now this looks like a straightforward regression, so you might say – “what’s the problem?”

Well, the catch is that gas prices and oil prices might be nonstationary – that is, one or another form of a random walk.

If this is so – and results indicating nonstationarity on standard tests such as the augmented Dickey-Fuller (ADF) and Phillips-Perron tests are widely reported – there is a big potential problem. It’s easy to regress one completely unrelated nonstationary time series onto another, getting an apparently significant result, only to find this relationship disappears in the forecast. In other words, two random series can, by chance, match up closely with each other over some period, but that’s no guarantee they will continue to do so.
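To make the spurious regression problem concrete, here is a minimal simulation sketch. It uses only synthetic data, not actual oil or gas prices, and the sample size, number of simulations, and the 1.96 cutoff are illustrative assumptions. It regresses one series on another, completely unrelated one and counts how often the slope looks "significant" by the usual t-test, first for white noise and then for random walks.

```python
import numpy as np

def slope_t_stat(y, x):
    """t-statistic of the slope in an OLS regression of y on a constant and x."""
    X = np.column_stack([np.ones_like(x), x])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    sigma2 = resid @ resid / (len(y) - 2)      # residual variance
    cov = sigma2 * np.linalg.inv(X.T @ X)      # conventional OLS covariance
    return beta[1] / np.sqrt(cov[1, 1])

def white_noise(rng, n):
    return rng.standard_normal(n)

def random_walk(rng, n):
    return np.cumsum(rng.standard_normal(n))

def rejection_rate(make_series, n_obs=200, n_sims=500, seed=0):
    """Share of simulations where two INDEPENDENT series look related (|t| > 1.96)."""
    rng = np.random.default_rng(seed)
    hits = 0
    for _ in range(n_sims):
        y = make_series(rng, n_obs)
        x = make_series(rng, n_obs)
        if abs(slope_t_stat(y, x)) > 1.96:
            hits += 1
    return hits / n_sims

noise_rate = rejection_rate(white_noise)
walk_rate = rejection_rate(random_walk)
print(f"apparently significant slopes, white noise:  {noise_rate:.0%}")
print(f"apparently significant slopes, random walks: {walk_rate:.0%}")
```

With stationary white noise the false-positive share should sit near the nominal 5 percent, while for independent random walks it is typically far higher, which is exactly the trap described above.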

Here’s where the concept of a co-integrating relationship comes into play.

If you can show, by various statistical tests, that variables are cointegrated, regressions such as the one above are more likely to be predictive.
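One common test of this kind is the Engle-Granger two-step procedure: estimate the regression above, then check whether its residuals are stationary. The sketch below is a stripped-down illustration on synthetic data, not a reproduction of any study mentioned here. The series `oil` and `gas`, the coefficient values, and the approximate 5 percent critical value of about -3.34 (taken from MacKinnon's tables for two variables with a constant) are all assumptions for illustration. In practice a packaged routine such as `coint` in statsmodels would be a more careful choice.

```python
import numpy as np

def ols(y, X):
    """Return OLS coefficients and residuals for y regressed on the columns of X."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta, y - X @ beta

def dickey_fuller_t(e):
    """t-statistic on rho in  diff(e)_t = rho * e_{t-1} + u_t  (no constant, no lags)."""
    de, lag = np.diff(e), e[:-1]
    rho = (lag @ de) / (lag @ lag)
    u = de - rho * lag
    se = np.sqrt((u @ u) / (len(de) - 1) / (lag @ lag))
    return rho / se

rng = np.random.default_rng(42)
n = 500

# Synthetic "oil price": a random walk, hence nonstationary.
oil = 50 + np.cumsum(rng.standard_normal(n))

# Stationary AR(1) disturbance around the long-run relationship.
shocks = 0.5 * rng.standard_normal(n)
noise = np.zeros(n)
for t in range(1, n):
    noise[t] = 0.5 * noise[t - 1] + shocks[t]

# Synthetic "gas price" tied to oil with alpha = 0.1 (the one-tenth rule of thumb).
gas = 1.0 + 0.1 * oil + noise

# Step 1: estimate the candidate cointegrating regression.
(const, alpha), resid = ols(gas, np.column_stack([np.ones(n), oil]))

# Step 2: test the residuals for a unit root.
t_stat = dickey_fuller_t(resid)
APPROX_CRIT_5PCT = -3.34   # assumed approximate Engle-Granger critical value

print(f"estimated alpha: {alpha:.3f}")
print(f"residual DF t-statistic: {t_stat:.2f}")
print("cointegration suggested:", t_stat < APPROX_CRIT_5PCT)
```

Note that the residual test cannot use ordinary Dickey-Fuller critical values, because the residuals come from an estimated regression; that is why a more negative threshold is applied.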

Well, several econometric studies show gas and oil prices are in a cointegrated relationship, using data from the 1990’s through sometime in the first decade of the 2000’s. The more sophisticated specify auxiliary variables to account for weather or changes in gas storage. You might download and read, for example, a study published in 2007 under the auspices of the Dallas Federal Reserve Bank – What Drives Natural Gas Prices?

But it does not appear that this cointegrated relationship is fixed. Instead, it changes over time, perhaps exemplifying various regimes, i.e. periods of time in which the underlying parameters switch to new values, even though a determinate relationship can still be demonstrated.
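A simple way to look for this kind of parameter drift is to re-estimate the regression over a rolling window and watch the coefficient. The sketch below uses synthetic data with one hypothetical regime break built in, where α drops from 0.10 to 0.03 halfway through the sample. The break date, window length, and noise levels are illustrative assumptions, and a rolling regression is only one informal diagnostic, not the method used in the studies cited here.

```python
import numpy as np

def rolling_slope(y, x, window):
    """OLS slope of y on x (with intercept), re-estimated over each rolling window."""
    slopes = []
    for start in range(len(y) - window + 1):
        xs = x[start:start + window]
        ys = y[start:start + window]
        slope, _intercept = np.polyfit(xs, ys, 1)
        slopes.append(slope)
    return np.array(slopes)

rng = np.random.default_rng(7)
n, window = 600, 150

# Synthetic "oil price" random walk.
oil = 80 + np.cumsum(1.5 * rng.standard_normal(n))

# Hypothetical regime switch: alpha = 0.10 in the first half, 0.03 in the second.
alpha_true = np.where(np.arange(n) < n // 2, 0.10, 0.03)
gas = 1.0 + alpha_true * oil + 0.3 * rng.standard_normal(n)

slopes = rolling_slope(gas, oil, window)
print(f"first window slope: {slopes[0]:.3f}")   # window lies entirely in regime 1
print(f"last window slope:  {slopes[-1]:.3f}")  # window lies entirely in regime 2
```

Windows that straddle the break mix the two regimes, so the estimated slope in those windows is not a reliable estimate of either value.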

Changing parameters are shown in the excellent 2012 study by Ramberg and Parsons in the Energy Journal – The Weak Tie Between Natural Gas and Oil Prices.

The Underlying Basis

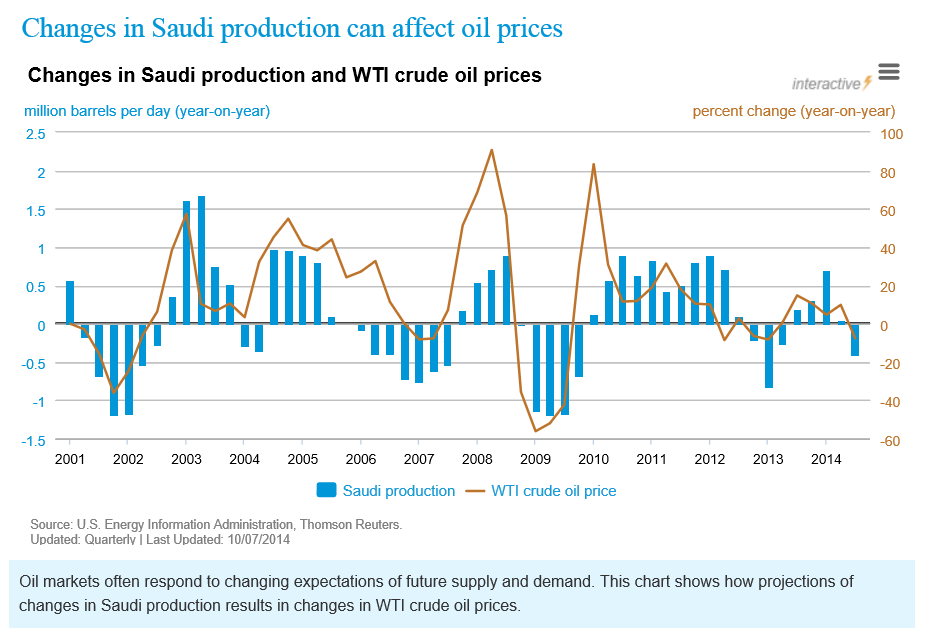

Anyway, there are facts relating to production and use of oil and natural gas which encourage us to postulate a relationship in their prices, although the relationship may shift over time.

This makes sense since oil and gas are limited or complete substitutes in various industrial processes. This used to be more compelling in electric power generation than it is today. According to the US Department of Energy, only limited amounts of electric power are still produced by generators running on oil, while natural gas turbines have grown in importance.

Still, natural gas is often produced alongside oil and is usually dissolved in it, so oil and natural gas are usually joint products.

Recently, technology has changed the picture with respect to gas and oil.

On the demand side, the introduction of the combined-cycle combustion turbine made natural gas electricity generation more cost effective, thereby making natural gas in electric power generation even more dominant.

On the supply side, the new technologies for extracting shale oil and natural gas – often summarized under the rubric of “fracking” or hydraulic fracturing – have totally changed the equation, resulting in dramatic increases in natural gas supplies in the US.

This leaves the interesting question of what sort of forecasting model for natural gas might be appropriate.