I’m instituting Video Friday. It’s the end of the work week, and videos introduce novelty and pleasant change in communications.

And we can keep focusing on matters related to forecasting applications and data analytics, or more generally on algorithmic guides to action.

Today I’m focusing on D-Wave and quantum computing. This could well take up several Fridays, with cool videos on underlying principles and panel discussions with analysts from D-Wave, Google and NASA. We’ll see. Probably, I will treat it as a theme, returning to it from time to time.

A couple of introductory comments.

First of all, David Wineland shared the 2012 Nobel Prize in Physics for experimental methods of measuring and manipulating individual quantum systems, work that underpins quantum computing. I’ve heard him speak, and know members of his family. Wineland did his work at the NIST laboratories in Boulder, also the location of Eric Cornell’s work, which was awarded a Nobel Prize in 2001.

I mention this because understanding quantum computing is more or less like trying to understand quantum physics, and, there, I think engineering has a role to play.

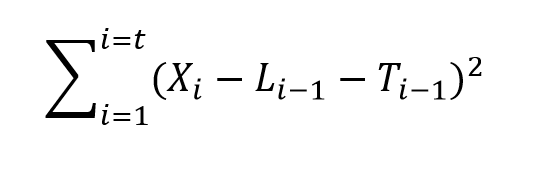

The basic concept is to exploit quantum superposition, or perhaps quantum entanglement, as a kind of parallel processor. The qubit, or quantum bit, is unlike the bit of classical computing. A qubit can be both 0 and 1 simultaneously, until its wave function is collapsed or dispersed by measurement. Accordingly, the argument goes, the number of states scales as powers of 2 (n qubits span 2^n combinations of 0s and 1s), and a mere 500 qubits could encode more states than there are atoms in the universe. Thus, quantum computers may really shine at problems where you have to search through all different combinations of things.
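To put rough numbers on that scaling argument, here is a minimal Python sketch. It only counts bit patterns and is not a quantum simulation. The figure of about 10^80 atoms in the observable universe is a commonly cited order-of-magnitude estimate that I am assuming here, and the function names are hypothetical, chosen just for illustration.

```python
# Rough illustration of the 2**n scaling argument; this counts bit
# patterns only and does not simulate any quantum behavior.

# Assumption: ~10**80 atoms in the observable universe
# (a commonly cited order-of-magnitude estimate).
ATOMS_IN_UNIVERSE = 10**80


def basis_states(n_qubits: int) -> int:
    """Number of distinct 0/1 patterns that n qubits can hold in superposition."""
    return 2**n_qubits


def min_qubits_exceeding(target: int) -> int:
    """Smallest n for which 2**n is strictly greater than target."""
    n = 0
    while basis_states(n) <= target:
        n += 1
    return n


if __name__ == "__main__":
    states_500 = basis_states(500)
    # Python integers are arbitrary precision, so this is exact.
    print("decimal digits in 2**500:", len(str(states_500)))
    print("exceeds atom estimate:", states_500 > ATOMS_IN_UNIVERSE)
    print("qubits needed to exceed estimate:",
          min_qubits_exceeding(ATOMS_IN_UNIVERSE))
```

Under that assumed estimate, 2^500 is a 151-digit number, and about 266 qubits already span more patterns than there are atoms, so the "mere 500 qubits" claim holds with a lot of room to spare.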

But while I can write Schrödinger’s wave equation, I don’t really understand it in any basic sense. It refers to a probability wave, whatever that is.

Feynman, whose lectures (and tapes or CDs) on physics I proudly own, says it is pointless to try to “understand” quantum weirdness. You have to be content with being able to predict outcomes of quantum experiments with the apparatus of the theory. The theory is highly predictive and quite successful, in that regard.

So I think D-Wave is really onto something. They are approaching the problem of developing a quantum computer technologically.

Here is a piece of fluff Google and others put together about their purchase of a D-Wave computer and what’s involved with quantum computing.

OK, so now here is Eric Ladizinsky in a talk from April of this year on Evolving Scalable Quantum Computers. I can see why Eric gets support from DARPA and Bezos, a range indeed. You really get the “aha” effect listening to him. For example, I have never before heard a coherent explanation of how the quantum weirdness typical of small particles gets dispersed in macroscopic-scale objects, like us. But this explanation, which is mathematically based on the wave equation, is essential to the D-Wave technology.

The video runs more than an hour, but consider bookmarking it if you pass on a full viewing now, since I assure you that this is probably the most substantive discussion I have yet found on this topic.

But is D-Wave’s machine a quantum computer?

Well, they keep raising money.

D-Wave Systems raises $30M to keep commercializing its quantum computer

But this infuriates some in the academic community, I suspect, who distrust the announcement of scientific discovery by press release.

There is a brilliant recent article in Wired on D-Wave, which touches on a recent challenge to its computational prowess (see Is D-Wave’s quantum computer actually a quantum computer?).

The Wired article gives Geordie Rose, a D-Wave founder, space to rebut, and these excellent comments follow:

Rose’s response to the new tests: “It’s total bullshit.”

D-Wave, he says, is a scrappy startup pushing a radical new computer, crafted from nothing by a handful of folks in Canada. From this point of view, Troyer had the edge. Sure, he was using standard Intel machines and classical software, but those benefited from decades’ and trillions of dollars’ worth of investment. The D-Wave acquitted itself admirably just by keeping pace. Troyer “had the best algorithm ever developed by a team of the top scientists in the world, finely tuned to compete on what this processor does, running on the fastest processors that humans have ever been able to build,” Rose says. And the D-Wave “is now competitive with those things, which is a remarkable step.”

But what about the speed issues? “Calibration errors,” he says. Programming a problem into the D-Wave is a manual process, tuning each qubit to the right level on the problem-solving landscape. If you don’t set those dials precisely right, “you might be specifying the wrong problem on the chip,” Rose says. As for noise, he admits it’s still an issue, but the next chip—the 1,000-qubit version codenamed Washington, coming out this fall—will reduce noise yet more. His team plans to replace the niobium loops with aluminum to reduce oxide buildup….

Or here’s another way to look at it…. Maybe the real problem with people trying to assess D-Wave is that they’re asking the wrong questions. Maybe his machine needs harder problems.

On its face, this sounds crazy. If plain old Intels are beating the D-Wave, why would the D-Wave win if the problems got tougher? Because the tests Troyer threw at the machine were random. On a tiny subset of those problems, the D-Wave system did better. Rose thinks the key will be zooming in on those success stories and figuring out what sets them apart—what advantage D-Wave had in those cases over the classical machine…. Helmut Katzgraber, a quantum scientist at Texas A&M, cowrote a paper in April bolstering Rose’s point of view. Katzgraber argued that the optimization problems everyone was tossing at the D-Wave were, indeed, too simple. The Intel machines could easily keep pace..

In one sense, this sounds like a classic case of moving the goalposts…. But D-Wave’s customers believe this is, in fact, what they need to do. They’re testing and retesting the machine to figure out what it’s good at. At Lockheed Martin, Greg Tallant has found that some problems run faster on the D-Wave and some don’t. At Google, Neven has run over 500,000 problems on his D-Wave and finds the same....

..it may be that quantum computing arrives in a slower, sideways fashion: as a set of devices used rarely, in the odd places where the problems we have are spoken in their curious language. Quantum computing won’t run on your phone—but maybe some quantum process of Google’s will be key in training the phone to recognize your vocal quirks and make voice recognition better. Maybe it’ll finally teach computers to recognize faces or luggage. Or maybe, like the integrated circuit before it, no one will figure out the best-use cases until they have hardware that works reliably. It’s a more modest way to look at this long-heralded thunderbolt of a technology. But this may be how the quantum era begins: not with a bang, but a glimmer.